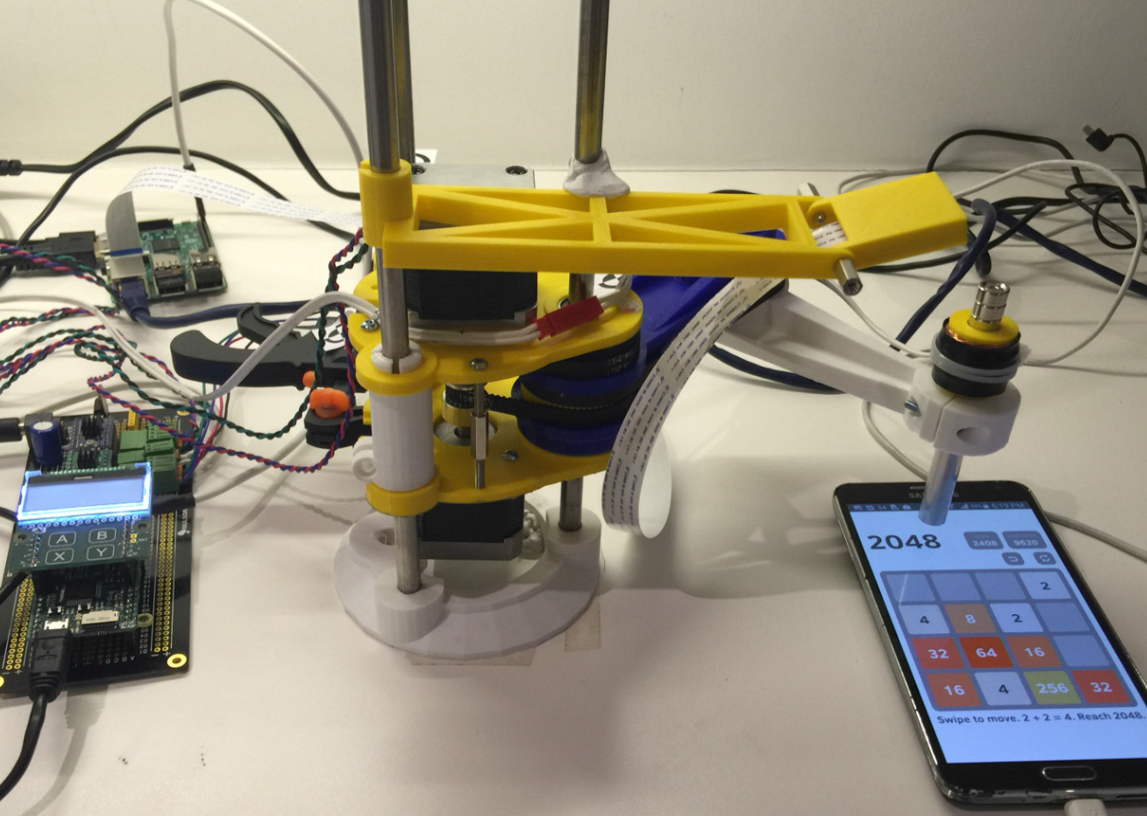

I’ve mentioned before that I’m working on a single-arm SCARA robot based on this design on Thingiverse. I still don’t feel totally confident in the correctness of my maths/code which drives the arm as the best circle I’ve produced with it still looks vaguely egg-shaped – more investigation is needed. But it has progressed enough to allow me to test other aspects of it out by playing a game on a smartphone.

The game I have chosen to experiment with is 2048, which started as a browser game and has spawned a number of interesting clones including a Fibonacci version. I’m certainly not the first to do this, but the main reason for choosing it as a test is that the game screen is relatively clean and the controls are a simple swipe in one of four directions.

If you just want to cut to the chase here it is on YouTube.

Elements

The elements I needed to bring together to make this work are:

- a robot arm capable of moving a tool (pen, etc.) over a region of a smartphone screen smoothly enough to be reliably detected as a swipe, plus controlling software to handle movement

- a “Finger” equivalent to use as the tool with the ability to move up and down quickly and be detected by the capacitive touch screen of the smartphone

- a camera mounted so that it can see enough of the smartphone screen to permit “reading” of the board position before each move

- software to interpret the camera image into a game board, compute a sensible next move and instruct the robot arm to make the movement necessary to play that move

As detailed before, the robot arm I’ve made has its own control electronics and uses a MicroPython PyBoard to run the arm control code. Since the PyBoard probably isn’t an ideal place to run camera capture and image recognition software – maybe someone will refute this? – I decided to add a Raspberry Pi for the heavier lifting. So I made a serial-cable connection between the PyBoard and the Pi (fortunately both use 3.3V logic) and wrote some code to drive the robot arm via a slightly G-code-like syntax.

Electromagnetic Finger

Swiping and button-pressing on a capacitive touch screen can be achieved without a real finger as long as the implement has a conductive tip. I found a pen from a recent EIE conference which fitted the bill and dismantled the cap by removing the pocket clip. The full assembly is a little contrived but includes a spring to lift the pen-tip away from the screen and an electromagnet to force the pen-tip onto the screen to simulate a key press.

There is a 3mm bolt down through the magnets and a 3D printed expansion piece to grip the pen body. This then fits inside a coil which was wound around another 3D printed former to create the desired amount of pull. I wound around 2000 turns manually to achieve this! The wire is 0.2mm enamelled copper and has a resistance of around 36Ω so, using the 12V supply, it draws around 300mA.
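As a quick sanity check on those coil figures, Ohm's law gives the current directly. The power figure below is derived from the same numbers rather than measured, so treat it as an estimate:

$$
I = \frac{V}{R} = \frac{12\,\mathrm{V}}{36\,\Omega} \approx 0.33\,\mathrm{A},
\qquad
P = V I \approx 12\,\mathrm{V} \times 0.33\,\mathrm{A} \approx 4\,\mathrm{W}
$$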

Camera and Z Axis

The camera is mounted directly onto the carriage assembly, which works fine since the robot arm currently doesn’t have a Z-axis (up/down) mechanism (I didn’t really feel that quick actions, like swiping on a touch screen, would work well with the lead-screw mechanism I had planned). So, for now, the Z position is held by clamping the carriage in place at the right height above the phone.

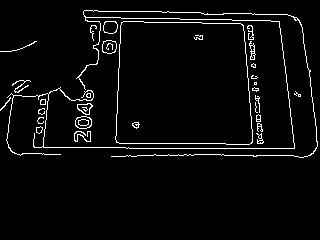

I decided it would be unrealistic to get the camera to be absolutely vertically above the smartphone display so the images the camera sees are like this.

The image is clearly in need of some correction to get the playing tiles to be square and readily identifiable by software. I tried out a few different approaches to identifying the playing area so that I could correct the distortion of the camera image.

Extracting the Game Board

This area probably took the most time – mainly because this is a new area for me and there was a lot to learn. I used OpenCV, which is well supported on the Raspberry Pi, and with Python it isn’t too difficult to get started. I found a great deal of material on the internet about image recognition, and practical tutorials like this helped greatly in understanding how to extract features of the game screen.

The first step was to correct the image and I tried a couple of approaches to this – neither of which has been totally satisfactory. The first approach I tried was as follows:

- Resize the image to 640 x 480

- Convert to greyscale and use Canny edge detection to create a monochrome image with only the edges visible (see below)

- Detect lines in this image using the Hough line detector (again see below)

- Start in the centre of the image and try to find a moderately rectangular shape with the mid-points of its sides reasonably close to equidistant from the opposite side

- Find the intersection (corners) points of this rectangle

- Use this rectangle to find a homography to correct the image
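The corner-finding step in the list above can be sketched in plain Python. This is a minimal illustration, not the code from the repository: it assumes each side of the rectangle has already been reduced to one line in the `(rho, theta)` form that OpenCV's Hough line detector returns, and the function names `intersect_hough_lines` and `rectangle_corners` are made up for this example.

```python
import math


def intersect_hough_lines(line_a, line_b, eps=1e-9):
    """Return the (x, y) crossing point of two (rho, theta) lines.

    Each line satisfies x*cos(theta) + y*sin(theta) = rho.
    Returns None when the lines are (nearly) parallel.
    """
    rho1, theta1 = line_a
    rho2, theta2 = line_b
    a1, b1 = math.cos(theta1), math.sin(theta1)
    a2, b2 = math.cos(theta2), math.sin(theta2)
    det = a1 * b2 - a2 * b1
    if abs(det) < eps:
        return None
    # Cramer's rule on the 2x2 linear system.
    x = (rho1 * b2 - rho2 * b1) / det
    y = (a1 * rho2 - a2 * rho1) / det
    return (x, y)


def rectangle_corners(top, right, bottom, left):
    """Corners in the order top-left, top-right, bottom-right, bottom-left."""
    return [
        intersect_hough_lines(top, left),
        intersect_hough_lines(top, right),
        intersect_hough_lines(bottom, right),
        intersect_hough_lines(bottom, left),
    ]


if __name__ == "__main__":
    # Hypothetical sides of a board roughly 300 x 300 pixels in size.
    top = (50.0, math.pi / 2)      # y = 50
    bottom = (350.0, math.pi / 2)  # y = 350
    left = (100.0, 0.0)            # x = 100
    right = (400.0, 0.0)           # x = 400
    print(rectangle_corners(top, right, bottom, left))
```

The four corners produced this way are what a perspective-correction step would take as its source points, with the corners of an upright square as the destination points.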

Although this approach worked OK, there were many occasions when the correct rectangle wasn’t found. So I decided to read up some more on the subject and found this tutorial which presents a different approach to the same problem.

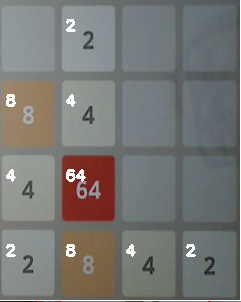

This approach has the same first two steps and the same final step, but instead of detecting lines using the Hough line detector it detects contours. This produces a hierarchy of potential contours and, by simplifying each contour, can allow a rectangle to be detected.
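To show what "simplifying each contour" means, here is a pure-Python sketch of the Douglas-Peucker algorithm, which is the method behind OpenCV's `approxPolyDP`. Whether the project uses that exact call is an assumption; the function names and the `epsilon` values here are illustrative only. A contour that collapses to exactly four points is a candidate for the game board.

```python
import math


def _point_line_distance(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    return abs(dy * (p[0] - a[0]) - dx * (p[1] - a[1])) / length


def simplify_open(points, epsilon):
    """Douglas-Peucker simplification of an open chain of points."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    index, max_dist = 0, 0.0
    for i in range(1, len(points) - 1):
        d = _point_line_distance(points[i], first, last)
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= epsilon:
        # Every interior point is close enough to the chord: drop them all.
        return [first, last]
    left = simplify_open(points[:index + 1], epsilon)
    right = simplify_open(points[index:], epsilon)
    return left[:-1] + right


def simplify_closed(contour, epsilon):
    """Simplify a closed contour by splitting it at the point farthest from the start."""
    start = contour[0]
    far = max(
        range(len(contour)),
        key=lambda i: math.hypot(contour[i][0] - start[0], contour[i][1] - start[1]),
    )
    first_half = simplify_open(contour[:far + 1], epsilon)
    second_half = simplify_open(contour[far:] + [start], epsilon)
    # Each half repeats its end point at the start of the next half.
    return first_half[:-1] + second_half[:-1]


def looks_like_quadrilateral(contour, epsilon):
    return len(simplify_closed(contour, epsilon)) == 4


if __name__ == "__main__":
    square = [(0, 0), (5, 0), (10, 0), (10, 5), (10, 10), (5, 10), (0, 10), (0, 5)]
    print(simplify_closed(square, 1.0))
```

A larger `epsilon` tolerates more deviation from a straight side, which helps with rounded corners but also lets the detected corners drift away from the true ones.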

I found this approach to be more reliable, although that might just be because I persisted with it more, but the corrected image has a slight rotational error which might be due to the fact that the “rectangle” on the screen showing the game area has rounded corners.

An alternative that might give the best of both worlds would be to combine the two approaches by using the line detector to resolve the straight sides of the rectangle – but I haven’t tried that yet and it may slow things down too much.

Detecting the Game Tiles

After resolving the game board, the final part of extracting the game state involved recognising each tile. My approach to this is very simple but seems to work:

- Split the game board into 16 “squares”

- Get the mean standard deviation of the colour in each square – if the standard deviation is low (< 7.5 is my current threshold) then it is blank

- Compare the tile to previously cropped tiles of each value and choose the one with the strongest correlation
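The blank test and the correlation match above can be sketched without any image library. This is an illustration under stated assumptions rather than the project's code: each square is a flat list of `(b, g, r)` pixel tuples, templates are greyscale lists keyed by tile value, and the names `mean_channel_stddev`, `correlation` and `classify_tile` are invented here. With OpenCV, calls such as `cv2.meanStdDev` and `cv2.matchTemplate` would more likely do this work.

```python
import math
from statistics import mean, pstdev

BLANK_STDDEV_THRESHOLD = 7.5  # the threshold quoted in the text


def mean_channel_stddev(pixels):
    """Average of the per-channel standard deviations of one square."""
    channels = list(zip(*pixels))
    return mean([pstdev(channel) for channel in channels])


def to_grey(pixels):
    """Plain channel average; an assumption, not a calibrated greyscale conversion."""
    return [sum(p) / len(p) for p in pixels]


def correlation(a, b):
    """Pearson correlation between two equal-length sequences."""
    mean_a, mean_b = mean(a), mean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def classify_tile(pixels, templates):
    """Return 0 for a blank square, else the best-correlated template value."""
    if mean_channel_stddev(pixels) < BLANK_STDDEV_THRESHOLD:
        return 0
    grey = to_grey(pixels)
    return max(templates, key=lambda value: correlation(grey, templates[value]))


if __name__ == "__main__":
    # Toy 4x4 "tiles" standing in for previously cropped reference images.
    templates = {2: [0, 255] * 8, 4: [0, 0, 255, 255] * 4}
    blank = [(200, 190, 180)] * 16
    two = [(v, v, v) for v in templates[2]]
    print(classify_tile(blank, templates), classify_tile(two, templates))
```

Because correlation ignores overall brightness and contrast, this kind of match is fairly tolerant of lighting changes but, as noted, not of rotation.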

This works pretty well assuming the lighting conditions and other factors such as rotation (see above) are under control.

Game Control Software

Having put a lot of effort into the game board recognition, I implemented a very simple strategy to play the game. In summary, it goes through each possible direction of movement and picks the one which results in the largest combined sum or, if no combinations are possible, the one which results in the maximum number of tile movements.
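A minimal sketch of that strategy follows. It is one reading of the description, not the code from the repository: "combined sum" is taken to mean the total value of the merged tiles a move creates, a "tile movement" is any tile that ends up in a different cell, and the board is a 4 x 4 list of lists with 0 for a blank. All function names are hypothetical.

```python
DIRECTIONS = ("left", "right", "up", "down")


def slide_line(line):
    """Slide one row or column towards index 0.

    Returns (merge_sum, tiles_moved) without modifying the input.
    """
    out = []
    can_merge = False  # a tile may only take part in one merge per move
    merge_sum = 0
    moved = 0
    for index, value in enumerate(line):
        if value == 0:
            continue
        if can_merge and out[-1] == value:
            out[-1] = value * 2
            merge_sum += value * 2
            can_merge = False
        else:
            out.append(value)
            can_merge = True
        if len(out) - 1 != index:
            moved += 1
    return merge_sum, moved


def lines_for(board, direction):
    """Return the board's rows or columns oriented so the move slides to index 0."""
    if direction in ("left", "right"):
        lines = [list(row) for row in board]
    else:
        lines = [list(col) for col in zip(*board)]
    if direction in ("right", "down"):
        lines = [line[::-1] for line in lines]
    return lines


def evaluate(board, direction):
    results = [slide_line(line) for line in lines_for(board, direction)]
    return sum(r[0] for r in results), sum(r[1] for r in results)


def choose_move(board):
    """Prefer the largest merge sum, then the most tile movements, else None."""
    scores = {d: evaluate(board, d) for d in DIRECTIONS}
    best_merge = max(DIRECTIONS, key=lambda d: scores[d][0])
    if scores[best_merge][0] > 0:
        return best_merge
    best_moves = max(DIRECTIONS, key=lambda d: scores[d][1])
    return best_moves if scores[best_moves][1] > 0 else None


if __name__ == "__main__":
    board = [[2, 2, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
    print(choose_move(board))
```

Ties are broken by the order of `DIRECTIONS`, which is an arbitrary choice made for this sketch.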

This strategy is clearly pretty dumb and there are some great examples of clever AI that have been applied to this game but, for my purposes, it works just fine.

The software for playing the game is here:

https://github.com/robdobsn/RobotPlay2048/

And the software for controlling the robot arm is here: